Project 5.11: "Personal Assistant Robot"

What you’ll learn

- ✅ Voice command (simulated): Detect claps or loud sounds and map them to simple “voice” commands.

- ✅ Perform tasks: Run small service tasks like “BRING”, “WATCH”, and “ANNOUNCE”.

- ✅ App + voice interaction: Accept commands from both the app and sound input safely.

- ✅ Routines: Program morning/afternoon routines that chain multiple tasks.

- ✅ Preference learning: Remember what the user likes and adapt future actions.

Key ideas

- Short definition: A personal assistant robot listens, decides, and acts—then learns your preferences.

- Real‑world link: Smart speakers and service robots mix voice, app controls, and habits to help people.

Blocks glossary (used in this project)

- Digital input (Pin 34): Reads sound sensor (1 loud, 0 quiet) for clap‑based “voice” cues.

- Bluetooth init + callback: Receives app commands instantly.

- Digital outputs (motor driver pins 18, 19, 5, 23): Drive L298N for basic movements.

- OLED shows: Displays status like TASK, ROUTINE, and PREFER.

- Serial println: Sends clean “KEY:VALUE” strings the app can read.

- Variables + lists: Store tasks, routines, and user preferences.
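The "KEY:VALUE" convention from the glossary is easiest to see from the receiving side. The sketch below is one possible way an app or test script could split such a line; `parse_message` is a hypothetical helper name, not part of the project's libraries, and it assumes each message arrives as a single line of text.

```python
def parse_message(line):
    """Split a 'KEY:VALUE' serial line into (key, value).

    Only the first colon separates key from value, so the value may
    contain colons itself. A line without a colon returns (line, "").
    """
    line = line.strip()
    if ":" not in line:
        return line, ""
    key, value = line.split(":", 1)
    return key.strip(), value.strip()


print(parse_message("TASK:BRING_DONE"))
print(parse_message("ROUTINE:STEP ANNOUNCE:Good morning"))
```

Splitting on the first colon only keeps messages such as `ANNOUNCE:Good morning` intact even when the text after the key contains more colons.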

What you need

| Part | How many? | Pin connection |

|---|---|---|

| D1 R32 | 1 | USB cable (30 cm) |

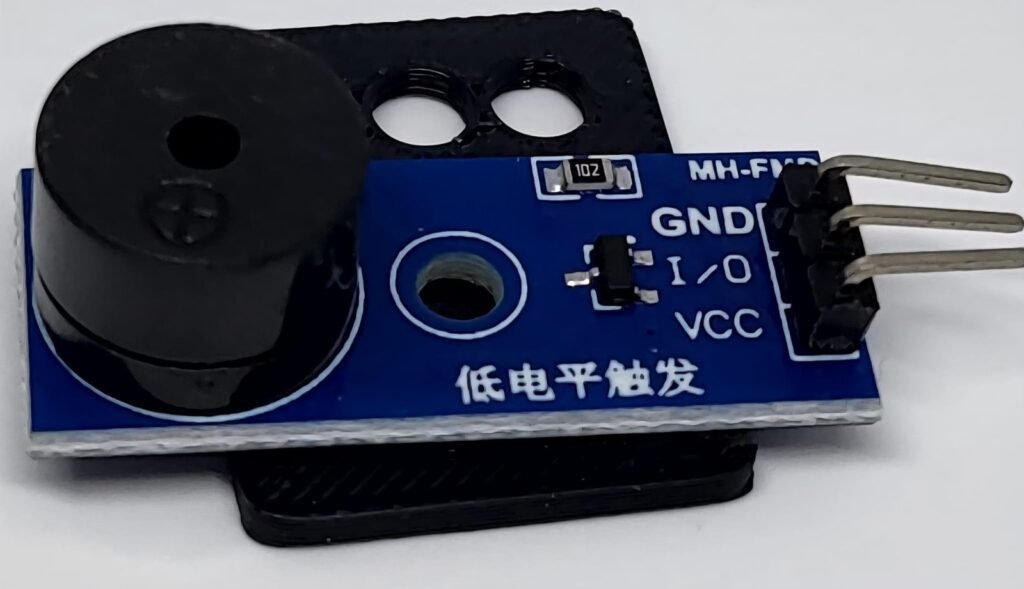

| Sound sensor (digital) | 1 | Signal → Pin 34, VCC, GND |

| L298N motor driver | 1 | Left IN1→18, IN2→19; Right IN3→5, IN4→23 |

| TT motors + wheels | 2 | L298N OUT1/OUT2 (left), OUT3/OUT4 (right) |

| 0.96″ OLED SSD1306 128×64 | 1 | I2C: SCL → Pin 22, SDA → Pin 21, VCC, GND |

| Smartphone (Bluetooth) | 1 | Connect to “Clu‑Bots” |

🔌 Wiring tip: Pins 34–39 are input‑only; use Pin 34 for sound. Keep motor wiring matched left/right to avoid reversed motion.

📍 Pin map snapshot: 34 (sound), 22/21 (OLED), 18/19/5/23 (motors). Others free.
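The wiring tip and pin map can be double-checked on a PC before anything is plugged in. This is a minimal sketch, not part of the project code: `PIN_MAP`, `INPUT_ONLY`, and `check_pin_map` are hypothetical names, and the "in"/"out"/"io" labels are an assumption used only for this check.

```python
# Pin assignments from the parts table: name -> (pin number, direction)
PIN_MAP = {
    "sound": (34, "in"),
    "oled_scl": (22, "io"),
    "oled_sda": (21, "io"),
    "left_in1": (18, "out"),
    "left_in2": (19, "out"),
    "right_in3": (5, "out"),
    "right_in4": (23, "out"),
}

INPUT_ONLY = range(34, 40)  # Pins 34-39 can only be used as inputs


def check_pin_map(pin_map):
    """Return a list of problems: duplicate pins or outputs on input-only pins."""
    problems = []
    seen = {}
    for name, (pin, direction) in pin_map.items():
        if pin in seen:
            problems.append("Pin %d used by both %s and %s" % (pin, seen[pin], name))
        seen[pin] = name
        if direction != "in" and pin in INPUT_ONLY:
            problems.append("Pin %d (%s) is input-only" % (pin, name))
    return problems


print(check_pin_map(PIN_MAP))  # An empty list means no problems were found
```

If a motor pin were moved to Pin 34 by mistake, the check would report both the clash with the sound sensor and the input-only restriction.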

Before you start

- USB cable is plugged in

- Serial monitor is open

- Test print shows:

print("Ready!") # Confirm serial is working so you can see messages

Microprojects 1–5

Microproject 5.11.1 – Voice command recognition (simulated with sound)

Goal: Use claps (sound=1) to print simple “voice” commands: 1 clap → “CMD:FWD”, 2 or more claps → “CMD:STOP”.

Blocks used:

- Digital input: Read Pin 34.

- Window timing: Count claps inside 800 ms.

- Serial println: Print commands.

Block sequence:

- Wait for first clap (sound=1).

- Start 800 ms window; count extra claps.

- Map count → commands; print them.

MicroPython code:

    import machine  # Import machine to access Pin hardware
    import time  # Import time to measure windows

    sound = machine.Pin(34, machine.Pin.IN)  # Create sound sensor input on Pin 34
    print("Microproject 5.11.1: Sound input ready on Pin 34")  # Confirm setup

    while True:  # Continuous listening loop
        if sound.value() == 1:  # Detect a clap or loud sound
            window_start = time.ticks_ms()  # Record the start of the counting window
            claps = 1  # Count the first clap
            print("VOICE:CLAP_1")  # Print detection of first clap
            time.sleep(0.15)  # Debounce to avoid double counting the same clap
            while time.ticks_diff(time.ticks_ms(), window_start) < 800:  # 800 ms window for more claps
                if sound.value() == 1:  # Detect an additional clap
                    claps += 1  # Increase clap count
                    print("VOICE:CLAP_EXTRA", claps)  # Show updated number of claps
                    time.sleep(0.15)  # Debounce after each detection
            if claps == 1:  # If just one clap in the window
                print("CMD:FWD")  # Print forward command (simulated voice)
            else:  # If two or more claps
                print("CMD:STOP")  # Print stop command (simulated voice)
            time.sleep(0.3)  # Small pause before listening again

Reflection: Claps become simple voice commands—short windows make them reliable.
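To see why the window and debounce values matter, the counting logic can be tried on a PC with recorded timestamps instead of a live sensor. The sketch below only approximates the on-board loop; `count_claps` and `claps_to_command` are hypothetical helper names, and the timestamps are made-up test values.

```python
def count_claps(times_ms, window_ms=800, debounce_ms=150):
    """Count claps in the window that starts at the first timestamp.

    times_ms: sorted times (in ms) at which the sensor read 1.
    Readings closer than debounce_ms to the last counted clap are treated
    as the same clap; readings at or after window_ms are ignored.
    """
    if not times_ms:
        return 0
    start = times_ms[0]
    last = start
    claps = 1
    for t in times_ms[1:]:
        if t - start >= window_ms:
            break  # Outside the counting window
        if t - last >= debounce_ms:
            claps += 1
            last = t
    return claps


def claps_to_command(claps):
    """Same mapping as the microproject: 1 clap -> FWD, 2 or more -> STOP."""
    if claps == 0:
        return ""
    return "CMD:FWD" if claps == 1 else "CMD:STOP"


print(claps_to_command(count_claps([0, 40, 90])))  # Sensor ringing counts as one clap
print(claps_to_command(count_claps([0, 300])))     # Two separate claps
```

Trying different `window_ms` and `debounce_ms` values here is a quick way to explore timing before testing with real claps.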

Challenge:

- Easy: Map 3 claps to “CMD:LEFT”.

- Harder: Add a “SILENT” mode variable to ignore claps temporarily.

Microproject 5.11.2 – Performing simple tasks (bringing, watching)

Goal: Create basic task functions: BRING (move forward 1 s), WATCH (scan and print status), ANNOUNCE (print a message).

Blocks used:

- Digital outputs: Control L298N IN pins.

- Serial println: Print TASK status.

Block sequence:

- Setup motor pins.

- Define tasks as functions.

- Print clear “TASK:…” messages.

MicroPython code:

    import machine  # Import machine to control motor pins
    import time  # Import time to add delays for task duration

    left_in1 = machine.Pin(18, machine.Pin.OUT)  # Left motor IN1 on Pin 18
    left_in2 = machine.Pin(19, machine.Pin.OUT)  # Left motor IN2 on Pin 19
    right_in3 = machine.Pin(5, machine.Pin.OUT)  # Right motor IN3 on Pin 5
    right_in4 = machine.Pin(23, machine.Pin.OUT)  # Right motor IN4 on Pin 23
    print("Microproject 5.11.2: Motors ready (18/19, 5/23)")  # Confirm motor setup

    def motors_stop():  # Helper to stop both motors
        left_in1.value(0)  # Left IN1 LOW
        left_in2.value(0)  # Left IN2 LOW
        right_in3.value(0)  # Right IN3 LOW
        right_in4.value(0)  # Right IN4 LOW
        print("MOTORS:STOP")  # Print stop status

    def motors_forward():  # Helper to move forward
        left_in1.value(1)  # Left IN1 HIGH
        left_in2.value(0)  # Left IN2 LOW
        right_in3.value(1)  # Right IN3 HIGH
        right_in4.value(0)  # Right IN4 LOW
        print("MOTORS:FWD")  # Print forward status

    def task_bring():  # Task: BRING (move forward for 1 second)
        print("TASK:BRING_START")  # Announce task start
        motors_forward()  # Move forward
        time.sleep(1.0)  # Drive forward for one second
        motors_stop()  # Stop after movement
        print("TASK:BRING_DONE")  # Announce task completion

    def task_watch():  # Task: WATCH (simulate scanning status)
        print("TASK:WATCH_START")  # Announce task start
        print("STATUS:AREA_CLEAR")  # Simulated status message
        time.sleep(0.5)  # Small delay to simulate scanning time
        print("TASK:WATCH_DONE")  # Announce task completion

    def task_announce(text="HELLO"):  # Task: ANNOUNCE (say a message)
        print("TASK:ANNOUNCE", text)  # Print the message to the app/console

Reflection: Simple task functions make your robot feel helpful—clear starts and finishes matter.

Challenge:

- Easy: Add “task_turn_left” that turns 500 ms.

- Harder: Chain BRING → WATCH → ANNOUNCE in a mini routine.

Microproject 5.11.3 – Interaction via app and voice

Goal: Accept commands from both the app (BLE) and voice (claps), choosing safely which to run.

Blocks used:

- Bluetooth callback: Receive app commands.

- Priority rule: Prefer app over voice to avoid conflicts.

- Serial println: Print “SOURCE:APP/VOICE”.

Block sequence:

- Set priority = “APP”.

- On voice “CMD:…”, store last_voice_cmd.

- On app “CMD:…”, store last_app_cmd and execute.

- If only voice present, execute voice.

MicroPython code:

    # Reuses task_bring(), task_watch(), task_announce(), and motors_stop() from Microproject 5.11.2
    import ble_central  # Import BLE central role to scan/connect
    import ble_peripheral  # Import BLE peripheral role to advertise name
    import ble_handle  # Import BLE handler to receive messages

    ble_c = ble_central.BLESimpleCentral()  # Create BLE central object
    ble_p = ble_peripheral.BLESimplePeripheral('Clu-Bots')  # Create BLE peripheral named 'Clu-Bots'
    ble_c.scan()  # Start scanning for peripherals
    ble_c.connect(name='Clu-Bots')  # Connect to 'Clu-Bots' by name
    print("Microproject 5.11.3: BLE connected")  # Confirm BLE setup

    priority = "APP"  # Choose to prefer app commands over voice
    last_voice_cmd = ""  # Store the latest voice command string
    last_app_cmd = ""  # Store the latest app command string
    print("Priority set to:", priority)  # Print current priority

    def execute(cmd):  # Simple executor for a few commands
        print("EXEC:", cmd)  # Print what will run
        if cmd == "BRING":  # If BRING task requested
            task_bring()  # Call bring task
        elif cmd == "WATCH":  # If WATCH task requested
            task_watch()  # Call watch task
        elif cmd.startswith("ANNOUNCE:"):  # If ANNOUNCE with text
            task_announce(cmd.split(":", 1)[1])  # Call announce with text
        elif cmd == "STOP":  # If stop requested
            motors_stop()  # Stop motors
        else:  # Unknown command
            print("CMD:UNKNOWN", cmd)  # Report unknown command

    def handle_method(key1, key2, key3, keyx):  # BLE receive callback
        global last_app_cmd  # Declare we update last_app_cmd
        msg = str(key1)  # Convert payload to text
        print("SOURCE:APP", msg)  # Label app source
        last_app_cmd = msg  # Store the latest app command
        execute(last_app_cmd)  # Execute app command immediately

    handle = ble_handle.Handle()  # Create BLE handler object
    handle.recv(handle_method)  # Attach BLE callback to receive messages

    # Example voice path integration (call this when claps are interpreted elsewhere)
    last_voice_cmd = "STOP"  # Simulate a voice STOP command coming in
    print("SOURCE:VOICE", last_voice_cmd)  # Label voice source
    if priority == "APP":  # If app has priority
        if last_app_cmd:  # If an app command exists
            execute(last_app_cmd)  # Execute app command (wins)
        else:  # If no app command
            execute(last_voice_cmd)  # Execute voice command
    else:  # If voice has priority
        execute(last_voice_cmd)  # Execute voice command directly

Reflection: A simple priority rule avoids battles—pick who wins and apply it consistently.
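The priority rule can also be written as one small function that has no hardware dependencies, which makes it easy to test on a PC. This is a sketch under that assumption; `choose_command` is a hypothetical name and is not used elsewhere in the project.

```python
def choose_command(priority, app_cmd, voice_cmd):
    """Pick which pending command to run.

    Returns (source, command); both are "" when nothing is pending.
    """
    if priority == "APP":
        order = [("APP", app_cmd), ("VOICE", voice_cmd)]
    else:
        order = [("VOICE", voice_cmd), ("APP", app_cmd)]
    for source, cmd in order:
        if cmd:  # The first non-empty command in priority order wins
            return source, cmd
    return "", ""


print(choose_command("APP", "BRING", "STOP"))
print(choose_command("APP", "", "STOP"))
print(choose_command("VOICE", "BRING", "STOP"))
```

Keeping the decision in one place means switching the priority only changes the order of the list, not the rest of the program.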

Challenge:

- Easy: Switch priority to “VOICE”.

- Harder: Add a LOCK state (2 s) that ignores new commands after execution.

Microproject 5.11.4 – Routine programming

Goal: Create named routines (e.g., MORNING) that run a sequence of tasks in order.

Blocks used:

- List of steps: Store a list like ["ANNOUNCE:Good morning", "WATCH", "BRING"].

- Loop: Execute each step.

Block sequence:

- Define routines dict with named lists.

- Write run_routine(name).

- Print ROUTINE progress clearly.

MicroPython code:

    # Reuses execute() from Microproject 5.11.3
    routines = {  # Define dictionary of named routines
        "MORNING": ["ANNOUNCE:Good morning", "WATCH", "BRING"],  # Morning sequence
        "EVENING": ["ANNOUNCE:Good evening", "WATCH", "STOP"],  # Evening sequence
    }
    print("Microproject 5.11.4: Routines ready:", list(routines.keys()))  # Show routine names

    def run_routine(name):  # Function to run a routine by name
        print("ROUTINE:START", name)  # Announce routine start
        steps = routines.get(name, [])  # Get step list or empty if not found
        for step in steps:  # Iterate through each step
            print("ROUTINE:STEP", step)  # Print current step
            execute(step)  # Execute step using the same executor
        print("ROUTINE:DONE", name)  # Announce routine completion

Reflection: Routines turn multiple taps into one button—easy for daily habits.
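Because a routine is just a list of strings, a typo in one step only shows up when the robot reaches it. One option is to check a routine before running it. The sketch below assumes the command set handled by `execute()` in this project; `KNOWN_COMMANDS`, `KNOWN_PREFIXES`, and `unknown_steps` are hypothetical names.

```python
KNOWN_COMMANDS = ("BRING", "WATCH", "STOP")  # Exact-match commands handled by execute()
KNOWN_PREFIXES = ("ANNOUNCE:",)              # Commands that carry text after the colon


def unknown_steps(steps):
    """Return the steps that execute() would report as CMD:UNKNOWN."""
    bad = []
    for step in steps:
        if step in KNOWN_COMMANDS:
            continue
        if any(step.startswith(prefix) for prefix in KNOWN_PREFIXES):
            continue
        bad.append(step)
    return bad


print(unknown_steps(["ANNOUNCE:Good morning", "WATCH", "BRING"]))
print(unknown_steps(["WATCH", "DANCE"]))
```

An empty result means every step is recognized; anything else lists the steps to fix before the routine is sent to the robot.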

Challenge:

- Easy: Add a “SCHOOL” routine with 2–3 steps.

- Harder: Add a pause step like “WAIT:1000” that sleeps for 1 s.

Microproject 5.11.5 – Preference learning mode

Goal: Remember favorite tasks and speeds; adapt future actions (e.g., prefer BRING over WATCH).

Blocks used:

- Variables: prefer_counts, prefer_speed.

- Update on use: Increment favorite counts.

- Serial println: Print “PREFER:…”.

Block sequence:

- Track counts per task.

- On execute(cmd), update counts.

- Print current preference.

MicroPython code:

    # Reuses execute() from Microproject 5.11.3
    prefer_counts = {"BRING": 0, "WATCH": 0, "ANNOUNCE": 0, "STOP": 0}  # Initialize task preference counts
    prefer_speed = 60  # Simulated preferred speed percent
    print("Microproject 5.11.5: Preference learning initialized")  # Confirm setup

    def update_preferences(cmd):  # Function to update preference data
        key = "ANNOUNCE" if cmd.startswith("ANNOUNCE:") else cmd  # Normalize ANNOUNCE variants
        if key in prefer_counts:  # Check if key is tracked
            prefer_counts[key] += 1  # Increase usage count
        print("PREFER:COUNTS", prefer_counts)  # Print updated counts
        print("PREFER:SPEED", prefer_speed)  # Print preferred speed

    def execute_with_learning(cmd):  # Wrapper to execute and learn
        update_preferences(cmd)  # Update preferences first
        execute(cmd)  # Execute the command using the same executor

Reflection: Preferences help your robot act “your way”—it notices what you ask most.
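Counting is only half of "learning"; the counts have to be read back to influence a decision. As a minimal sketch, the helper below picks the most-used task from a counts dictionary shaped like `prefer_counts`. The name `favorite_task` and the sample numbers are assumptions for illustration.

```python
def favorite_task(counts):
    """Return the most-used task name, or "" if nothing has run yet.

    On a tie, the task the loop visits first is kept.
    """
    best_name = ""
    best_count = 0
    for name, count in counts.items():
        if count > best_count:  # Strictly greater, so zero counts never win
            best_name = name
            best_count = count
    return best_name


sample_counts = {"BRING": 3, "WATCH": 1, "ANNOUNCE": 2, "STOP": 0}  # Made-up usage data
print("PREFER:TASK", favorite_task(sample_counts))
```

Returning an empty string when all counts are zero lets the caller fall back to a default instead of guessing a favorite.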

Challenge:

- Easy: Increase prefer_speed by 10 when BRING runs.

- Harder: If one task count is double the others, make routines start with that task.

Main project – Personal assistant robot

Blocks steps (with glossary)

- Voice (sound sensor): Turn claps into simple “CMD:…” strings.

- Tasks: BRING/WATCH/ANNOUNCE functions with clear start/stop prints.

- Interaction: App BLE callback plus voice priority rules.

- Routines: Named sequences that call tasks in order.

- Learning: Store preferences and adapt actions.

Block sequence:

- Setup sound input, motors, and OLED.

- Define tasks and an execute() function.

- Handle app commands with BLE callback; integrate voice.

- Run routines by name with progress prints.

- Update preferences after each execution.

MicroPython code (mirroring blocks)

    # Project 5.11 – Personal Assistant Robot
    import machine  # Control pins (sound, motors) and I2C (OLED)
    import oled128x64  # SSD1306 OLED driver
    import ble_central  # BLE central role
    import ble_peripheral  # BLE peripheral role
    import ble_handle  # BLE handler for callbacks
    import time  # Timing for windows and delays

    # Hardware setup
    sound = machine.Pin(34, machine.Pin.IN)  # Sound sensor input on Pin 34
    left_in1 = machine.Pin(18, machine.Pin.OUT)  # Left IN1 motor pin
    left_in2 = machine.Pin(19, machine.Pin.OUT)  # Left IN2 motor pin
    right_in3 = machine.Pin(5, machine.Pin.OUT)  # Right IN3 motor pin
    right_in4 = machine.Pin(23, machine.Pin.OUT)  # Right IN4 motor pin
    print("HW: Sound=34, Motors=18/19/5/23")  # Confirm hardware pins

    i2c = machine.SoftI2C(scl=machine.Pin(22), sda=machine.Pin(21), freq=100000)  # I2C bus for OLED
    oled = oled128x64.OLED(i2c, address=0x3c, font_address=0x3A0000, types=0)  # Initialize OLED display
    print("OLED initialized at 0x3C")  # Confirm OLED setup

    # BLE setup
    ble_c = ble_central.BLESimpleCentral()  # Create BLE central
    ble_p = ble_peripheral.BLESimplePeripheral('Clu-Bots')  # Peripheral named 'Clu-Bots'
    ble_c.scan()  # Scan for BLE device
    ble_c.connect(name='Clu-Bots')  # Connect to peripheral
    handle = ble_handle.Handle()  # Create BLE callback handler
    print("BLE connected and handler ready")  # Confirm BLE

    # Preference data
    prefer_counts = {"BRING": 0, "WATCH": 0, "ANNOUNCE": 0, "STOP": 0}  # Initialize counts
    prefer_speed = 60  # Simulated preferred speed percent
    print("Preferences initialized")  # Confirm preferences

    # Priority and last commands
    priority = "APP"  # Choose app priority over voice
    last_app_cmd = ""  # Store most recent app command
    last_voice_cmd = ""  # Store most recent voice command
    print("Priority:", priority)  # Print chosen priority

    # Motor helpers
    def motors_stop():  # Stop motors
        left_in1.value(0)  # Left IN1 LOW
        left_in2.value(0)  # Left IN2 LOW
        right_in3.value(0)  # Right IN3 LOW
        right_in4.value(0)  # Right IN4 LOW
        print("MOTORS:STOP")  # Print stop

    def motors_forward():  # Forward motion
        left_in1.value(1)  # Left IN1 HIGH
        left_in2.value(0)  # Left IN2 LOW
        right_in3.value(1)  # Right IN3 HIGH
        right_in4.value(0)  # Right IN4 LOW
        print("MOTORS:FWD")  # Print forward

    # Task functions
    def task_bring():  # BRING task
        print("TASK:BRING_START")  # Announce start
        oled.clear()  # Clear OLED
        oled.shows('BRING...', x=0, y=20, size=1, space=0, center=False)  # Show task text
        oled.show()  # Refresh OLED
        motors_forward()  # Move forward
        time.sleep(1.0)  # Drive for 1 second
        motors_stop()  # Stop motors
        oled.shows('BRING DONE', x=0, y=35, size=1, space=0, center=False)  # Show done text
        oled.show()  # Refresh OLED
        print("TASK:BRING_DONE")  # Announce completion

    def task_watch():  # WATCH task
        print("TASK:WATCH_START")  # Announce start
        oled.clear()  # Clear OLED
        oled.shows('WATCH...', x=0, y=20, size=1, space=0, center=False)  # Show task text
        oled.show()  # Refresh OLED
        time.sleep(0.5)  # Simulate scanning time
        oled.shows('AREA CLEAR', x=0, y=35, size=1, space=0, center=False)  # Show status
        oled.show()  # Refresh OLED
        print("STATUS:AREA_CLEAR")  # Print status
        print("TASK:WATCH_DONE")  # Announce completion

    def task_announce(text="HELLO"):  # ANNOUNCE task
        print("TASK:ANNOUNCE", text)  # Announce message
        oled.clear()  # Clear OLED
        oled.shows('ANNOUNCE:', x=0, y=10, size=1, space=0, center=False)  # Label text
        oled.shows(text, x=0, y=25, size=1, space=0, center=False)  # Show message
        oled.show()  # Refresh OLED

    # Executor with learning
    def update_preferences(cmd):  # Update preference counters
        key = "ANNOUNCE" if cmd.startswith("ANNOUNCE:") else cmd  # Normalize announce key
        if key in prefer_counts:  # If key tracked
            prefer_counts[key] += 1  # Increment usage
        print("PREFER:COUNTS", prefer_counts)  # Print counters
        print("PREFER:SPEED", prefer_speed)  # Print speed preference

    def execute(cmd):  # Execute a command
        print("EXEC:", cmd)  # Print to serial/app
        update_preferences(cmd)  # Update learning data
        if cmd == "BRING":  # If bring requested
            task_bring()  # Run bring task
        elif cmd == "WATCH":  # If watch requested
            task_watch()  # Run watch task
        elif cmd.startswith("ANNOUNCE:"):  # If announce message
            task_announce(cmd.split(":", 1)[1])  # Run announce with text
        elif cmd == "STOP":  # If stop requested
            motors_stop()  # Stop movement
        else:  # Unknown
            print("CMD:UNKNOWN", cmd)  # Report unknown command

    # Routines
    routines = {  # Define named routines
        "MORNING": ["ANNOUNCE:Good morning", "WATCH", "BRING"],  # Morning steps
        "EVENING": ["ANNOUNCE:Good evening", "WATCH", "STOP"],  # Evening steps
    }
    print("Routines:", list(routines.keys()))  # Print routine names

    def run_routine(name):  # Execute a routine by name
        print("ROUTINE:START", name)  # Announce start
        steps = routines.get(name, [])  # Get steps or empty
        for step in steps:  # Iterate steps
            print("ROUTINE:STEP", step)  # Print current step
            execute(step)  # Execute step
        print("ROUTINE:DONE", name)  # Announce completion

    # BLE callback for app interaction
    def handle_method(key1, key2, key3, keyx):  # BLE receive callback
        global last_app_cmd  # Declare global to store app command
        msg = str(key1)  # Convert payload to text
        print("SOURCE:APP", msg)  # Label app message
        last_app_cmd = msg  # Save latest app command (marks that the app was active)
        if msg.startswith("ROUTINE:"):  # If routine call
            run_routine(msg.split(":", 1)[1])  # Run specific routine
        else:  # Otherwise treat as task
            execute(msg)  # Execute task command

    handle.recv(handle_method)  # Attach BLE receive callback

    # Voice integration: convert claps to a command (called once per main-loop pass)
    def listen_voice_once():  # Listen for claps and return a command
        if sound.value() == 1:  # Detect first clap
            window_start = time.ticks_ms()  # Mark window start
            claps = 1  # First clap counted
            time.sleep(0.15)  # Debounce sensor ringing
            while time.ticks_diff(time.ticks_ms(), window_start) < 800:  # 800 ms window
                if sound.value() == 1:  # Another clap
                    claps += 1  # Increase count
                    time.sleep(0.15)  # Debounce
            cmd = "BRING" if claps == 1 else "STOP"  # Map claps to command
            print("SOURCE:VOICE", cmd)  # Label voice command
            return cmd  # Return mapped command
        return ""  # Return empty if no voice event

    # Main loop: app commands already ran inside the BLE callback, so the loop
    # only decides whether a voice command may run, based on priority
    while True:  # Continuous assistant loop
        voice_cmd = listen_voice_once()  # Try to get a voice command
        if priority == "APP":  # If app priority
            if last_app_cmd:  # An app command ran in the callback since the last pass
                last_app_cmd = ""  # Clear the marker; the app wins, so voice is skipped this pass
            elif voice_cmd:  # If voice command exists and no app command
                execute(voice_cmd)  # Execute voice command
        else:  # If voice priority
            if voice_cmd:  # If voice command exists
                execute(voice_cmd)  # Execute voice command
            last_app_cmd = ""  # App command already ran in the callback; clear the marker
        time.sleep(0.2)  # Small delay to keep loop responsive without spamming

External explanation

- What it teaches: You built a personal assistant flow: voice claps to commands, app buttons to tasks, routines to chain actions, and preferences to learn what you like.

- Why it works: Sound input provides quick “voice” cues; BLE callbacks deliver app commands fast; clear task functions make actions predictable; routines simplify everyday sequences; preference counters guide future choices.

- Key concept: “Listen → decide → act → learn.”

Story time

Imagine your robot hearing a clap and moving to help, while your app sends a routine to start the day. It watches, announces, and remembers your style—becoming more “you” over time.

Debugging (2)

Debugging 5.11.1 – Misinterpreted commands

Problem: Robot runs the wrong task after a clap or app tap.

Clues: Serial shows SOURCE:VOICE/APP but EXEC prints an unexpected command.

Broken code:

cmd = "STOP" # Default set incorrectly (forces STOP on single clap)

Fixed code:

    cmd = "BRING" if claps == 1 else "STOP"  # Map 1 clap to BRING, 2+ to STOP
    print("MAP:CLAPS->CMD", claps, "->", cmd)  # Print mapping for clarity

Why it works: Explicit mapping prevents accidental defaults and shows the logic on serial.

Avoid next time: Always print how signals map to actions.
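One way to keep the mapping visible is to store it in a small table and log every lookup. The sketch below assumes the same 1 clap → BRING, 2 or more → STOP rule; `CLAP_COMMANDS` and `map_claps` are hypothetical names.

```python
CLAP_COMMANDS = {1: "BRING", 2: "STOP"}  # Clap count -> command


def map_claps(claps, default="STOP"):
    """Look up the command for a clap count and print the mapping.

    Counts that are not in the table (3 or more) fall back to default.
    """
    cmd = CLAP_COMMANDS.get(claps, default)
    print("MAP:CLAPS->CMD", claps, "->", cmd)
    return cmd


map_claps(1)
map_claps(2)
map_claps(5)
```

With the table in one place, adding a new clap pattern is a one-line change, and the serial log always shows which entry was used.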

Debugging 5.11.2 – Unfinished tasks

Problem: Tasks start but don’t cleanly stop or announce completion.

Clues: Motors stay on or OLED doesn’t show “DONE”.

Broken code:

    def task_bring():
        motors_forward()  # Starts motors
        # Missing stop and completion prints

Fixed code:

    def task_bring():
        print("TASK:BRING_START")  # Announce start
        motors_forward()  # Start movement
        time.sleep(1.0)  # Duration
        motors_stop()  # Ensure stop
        print("TASK:BRING_DONE")  # Announce completion

Why it works: A clear start → do → stop → done pattern guarantees tasks finish reliably.

Avoid next time: Add “DONE” prints and stops in every task function.
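The start → do → stop → done pattern can be enforced in one place with `try`/`finally`, so the motors stop even if the action fails partway. This is a sketch that runs on a PC: the motor helpers are stand-ins that record messages in a list instead of driving pins, and `run_task` is a hypothetical wrapper name.

```python
log = []  # Stand-in for serial output so the sketch runs without hardware


def motors_forward():
    log.append("MOTORS:FWD")  # On the robot this would set the IN pins


def motors_stop():
    log.append("MOTORS:STOP")  # On the robot this would clear the IN pins


def run_task(name, action):
    """Run action() between START and DONE messages, always stopping the motors."""
    log.append("TASK:%s_START" % name)
    try:
        action()
    finally:
        motors_stop()  # Runs even if action() raises an error
    log.append("TASK:%s_DONE" % name)


run_task("BRING", motors_forward)
print(log)
```

If `action()` raises, the DONE message is skipped but the stop still happens, which makes an unfinished task easy to spot in the log without leaving the motors running.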

Final checklist

- Voice claps map to commands and print clearly

- BRING/WATCH/ANNOUNCE tasks start, stop, and report “DONE”

- App commands execute immediately via BLE callback

- Routines run all steps in order and print progress

- Preferences update after each execution and print counts

Extras

- 🧠 Student tip: Add “SAFE MODE” that ignores voice for 5 seconds after any movement.

- 🧑‍🏫 Instructor tip: Have students sketch task diagrams (start → action → stop → done) before coding, which helps prevent unfinished tasks.

- 📖 Glossary:

  - Priority rule: Policy deciding whether app or voice wins when both send commands.

  - Routine: A named sequence of steps run in order.

  - Preference learning: Keeping counts or settings that adapt actions to user habits.

- 💡 Mini tips:

  - Keep messages short and labeled (TASK, ROUTINE, PREFER).

  - Use small delays to avoid spamming the serial monitor.

  - Test clap timing near the robot to reduce false detections.